Classification

Introduction

Classification is a fundamental task in supervised machine learning where the goal is to predict the categorical class label of new observations based on past observations with known labels.

In classification tasks, the data consists of instances (or samples) with features (or attributes) and corresponding class labels. The task is to build a model that learns the relationship between the features and the class labels from the training data. Once the model is trained, it can be used to predict the class labels of new, unseen instances.
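To make the train-then-predict idea concrete, here is a minimal sketch in plain Python. It uses a nearest-centroid classifier, chosen only because it is short; it is not one of the algorithms discussed later, although scikit-learn ships a comparable `NearestCentroid` class. "Training" stores the mean feature vector of each class, and prediction assigns a new instance to the class with the closest mean. The class name, method names, and toy data are illustrative assumptions (requires Python 3.8+ for `math.dist`).

```python
from math import dist  # Euclidean distance between two points (Python 3.8+)


class NearestCentroidClassifier:
    """Toy classifier (hypothetical): learns one mean feature vector per class."""

    def fit(self, X, y):
        # Group the training instances by their known class label.
        groups = {}
        for features, label in zip(X, y):
            groups.setdefault(label, []).append(features)
        # "Training" here means summarising each class by the mean of its feature vectors.
        self.centroids = {
            label: [sum(col) / len(col) for col in zip(*rows)]
            for label, rows in groups.items()
        }
        return self

    def predict(self, X):
        # Assign each new, unseen instance to the class whose centroid is closest.
        return [
            min(self.centroids, key=lambda label: dist(x, self.centroids[label]))
            for x in X
        ]


# Training data: each instance has two features and a known class label.
X_train = [[1.0, 1.2], [0.8, 1.0], [1.1, 0.9], [4.0, 4.2], [4.1, 3.9], [3.8, 4.0]]
y_train = ["A", "A", "A", "B", "B", "B"]

model = NearestCentroidClassifier().fit(X_train, y_train)
print(model.predict([[1.0, 1.0], [4.0, 4.0]]))  # ['A', 'B']
```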

Here's how classification typically works:

- Data Collection: The first step is to collect a labeled dataset consisting of instances with features and corresponding class labels. Each instance represents a data point, and the features describe its characteristics. The class labels indicate the categories or classes to which the instances belong.

- Data Preprocessing: Before building a classification model, it's essential to preprocess the data. This may involve tasks such as handling missing values, encoding categorical variables, scaling or normalizing numerical features, and splitting the data into training and testing sets.

- Model Selection: Next, you select an appropriate classification algorithm based on the nature of the data and the problem you're trying to solve. Common algorithms include logistic regression, decision trees, random forests, support vector machines (SVM), k-nearest neighbors (KNN), naive Bayes, and neural networks.

- Model Training: Once you've chosen a classification algorithm, you train the model on the training data. During training, the model learns the relationship between the features and the class labels from the labeled examples in the training set. For most algorithms, this means finding the parameter values that minimize a loss function measuring how far the predicted labels are from the true labels (for example, log-loss in logistic regression).

- Model Evaluation: After training, you evaluate the model's performance on the held-out testing data. Metrics such as accuracy, precision, recall, and F1-score, together with a confusion matrix, show how well the model generalizes to new, unseen data. Cross-validation can also be used to obtain a more reliable performance estimate and to detect overfitting.

- Model Deployment: Once the model has been trained and evaluated, it can be deployed to make predictions on new, unseen instances. The model takes the features of a new instance as input and predicts the most likely class label based on the learned decision boundary or decision function.

- Monitoring and Maintenance: After deployment, it's essential to monitor the performance of the model in production and update it as needed. This may involve retraining the model periodically with new data, fine-tuning hyperparameters, and handling concept drift or changes in the underlying data distribution.
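Several of the steps above (splitting, scaling, and evaluation) can be sketched without any library. The following assumes a binary task with labels 0 and 1; the helper names `train_test_split`, `fit_min_max`, `apply_min_max`, and `binary_metrics` are hypothetical, although scikit-learn offers similar utilities such as `train_test_split` and `MinMaxScaler`. The split shuffles with a fixed seed so results are reproducible, the scaler learns its statistics from the training set only, and the metrics are derived from confusion-matrix counts.

```python
import random


def train_test_split(X, y, test_size=0.25, seed=0):
    """Shuffle instance indices with a fixed seed, then hold out a test fraction."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, int(len(X) * test_size))
    train_idx, test_idx = indices[n_test:], indices[:n_test]

    def take(seq, idx):
        return [seq[i] for i in idx]

    return take(X, train_idx), take(X, test_idx), take(y, train_idx), take(y, test_idx)


def fit_min_max(X_train):
    """Learn per-feature minimum and maximum from the training set only."""
    cols = list(zip(*X_train))
    return [min(c) for c in cols], [max(c) for c in cols]


def apply_min_max(X, lows, highs):
    """Rescale features to [0, 1] using statistics learned on the training set."""
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs)]
        for row in X
    ]


def binary_metrics(y_true, y_pred, positive=1):
    """Confusion-matrix counts and the metrics derived from them (binary task)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        # Rows: true class (negative, positive); columns: predicted class.
        "confusion_matrix": [[tn, fp], [fn, tp]],
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
metrics = binary_metrics(y_true, y_pred)
print(metrics["confusion_matrix"], metrics["accuracy"])  # [[2, 2], [1, 3]] 0.625
```

Fitting the scaler on the training split alone and then applying it to the test split keeps information about the test set from leaking into training, which would otherwise make the evaluation look better than it really is.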

Classification is widely used in various applications, including:

- Spam Detection: Classifying emails as spam or non-spam based on their content and metadata.

- Medical Diagnosis: Predicting whether a patient has a particular disease based on their symptoms and medical history.

- Image Recognition: Identifying objects or patterns in images, such as handwritten digits, faces, or objects in satellite imagery.

- Sentiment Analysis: Classifying text documents or social media posts as positive, negative, or neutral based on their sentiment.

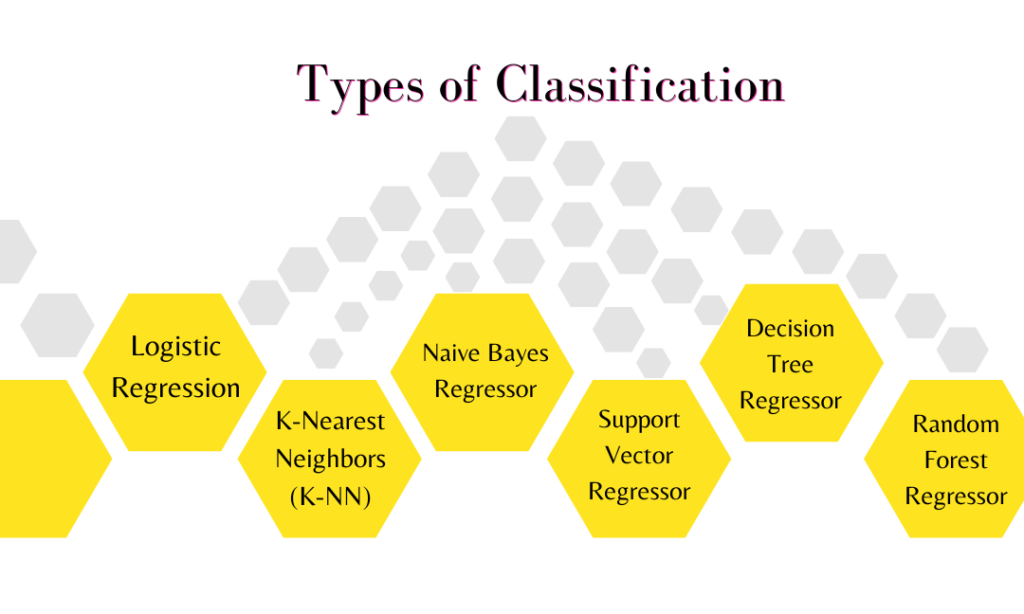

Common classification algorithms used in supervised machine learning:

- Logistic Regression: Logistic regression is a linear classification algorithm that models the probability that an instance belongs to a particular class. It uses the logistic function to ensure that the predicted probabilities fall between 0 and 1, making it suitable for binary classification tasks. Despite its name, logistic regression is used for classification rather than regression, and it's valued for its simplicity and interpretability.

- K-Nearest Neighbors (KNN): KNN is a simple and intuitive classification algorithm that assigns an instance the majority class among its K nearest neighbors in the feature space. It is non-parametric (it assumes no fixed form for the decision boundary) and lazy, meaning it does not learn an explicit model during training; instead, it stores all training instances and performs the classification work at prediction time.

- Naive Bayes: Naive Bayes is a probabilistic classification algorithm based on Bayes' theorem and the assumption that features are conditionally independent given the class. Despite this simplifying ("naive") assumption, Naive Bayes often performs well in practice, especially for text classification and other high-dimensional datasets.

- Support Vector Machines (SVM): Support Vector Machines are powerful classification algorithms that find the hyperplane separating the classes with the largest possible margin in the feature space. Maximizing the margin tends to improve generalization, and the soft-margin formulation tolerates some misclassified points, which reduces sensitivity to noise and outliers. Using different kernel functions, SVMs can handle both linear and nonlinear classification tasks.
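The following plain-Python sketches illustrate three of the algorithms above on a tiny two-feature dataset. They are simplified teaching versions under stated assumptions (binary labels 0/1, batch gradient descent with a fixed learning rate for logistic regression, Gaussian per-feature likelihoods for naive Bayes), not production implementations, and all function names are hypothetical. SVM is left out because training it means solving a constrained optimization problem, which is normally delegated to a library such as scikit-learn's `SVC` or LIBSVM.

```python
from collections import Counter
from math import dist, exp, log, pi


# --- K-nearest neighbors: no training step, all work happens at prediction time ---
def knn_predict(X_train, y_train, x, k=3):
    # Sort the stored training instances by Euclidean distance to x; keep the k closest.
    neighbors = sorted(zip(X_train, y_train), key=lambda pair: dist(pair[0], x))[:k]
    # Majority vote among the k neighbors' labels.
    return Counter(label for _, label in neighbors).most_common(1)[0][0]


# --- Logistic regression: labels 0/1, batch gradient descent on mean log-loss ---
def sigmoid(z):
    return 1.0 / (1.0 + exp(-z))


def train_logistic(X, y, lr=0.1, epochs=1000):
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            # Gradient of log-loss w.r.t. the linear score is (predicted prob - label).
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def logistic_proba(w, b, x):
    # Estimated P(class = 1 | x); the logistic function keeps it between 0 and 1.
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# --- Gaussian naive Bayes: per-class prior plus per-feature mean and variance ---
def train_gaussian_nb(X, y):
    model = {}
    for label in set(y):
        rows = [xi for xi, yi in zip(X, y) if yi == label]
        cols = list(zip(*rows))
        means = [sum(c) / len(c) for c in cols]
        # A small constant keeps a zero variance from causing division by zero.
        variances = [sum((v - m) ** 2 for v in c) / len(c) + 1e-9 for c, m in zip(cols, means)]
        model[label] = (len(rows) / len(X), means, variances)
    return model


def nb_predict(model, x):
    def log_posterior(label):
        prior, means, variances = model[label]
        # Naive assumption: features are conditionally independent given the class,
        # so the log-likelihood is a sum of per-feature Gaussian log-densities.
        return log(prior) + sum(
            -0.5 * log(2 * pi * var) - (v - m) ** 2 / (2 * var)
            for v, m, var in zip(x, means, variances)
        )
    return max(model, key=log_posterior)


# Toy, linearly separable data centred on the origin; labels are 0 and 1.
X = [[-2.0, -1.5], [-1.5, -2.0], [-1.0, -1.2], [1.0, 1.2], [1.5, 2.0], [2.0, 1.5]]
y = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(X, y)
nb = train_gaussian_nb(X, y)
for point in ([-1.5, -1.5], [1.5, 1.5]):
    # Expected: KNN and naive Bayes predict 0 for the first point and 1 for the second;
    # the logistic probability is below 0.5 for the first point and above 0.5 for the second.
    print(point, knn_predict(X, y, point), round(logistic_proba(w, b, point), 3), nb_predict(nb, point))
```

KNN does all its work at prediction time, whereas logistic regression and naive Bayes compress the training data into a few parameters up front, which mirrors the lazy versus model-based distinction described above.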
