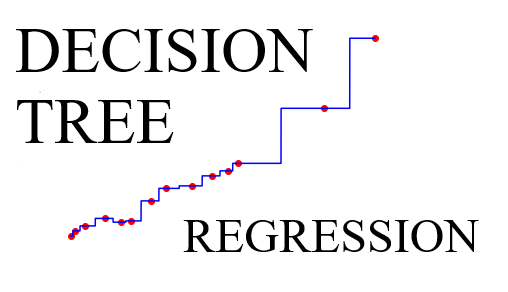

Decision Tree Regression

Introduction

Decision Tree Regression is a supervised learning algorithm for regression tasks. It works by partitioning the feature space into smaller regions and fitting a simple model to each region, usually a constant equal to the mean target value of the training samples in that region. It is a non-parametric method: it does not assume a fixed functional form for the relationship between features and target, so it can capture complex, non-linear relationships.
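The snippet below is a minimal sketch of the piecewise-constant idea. It fits scikit-learn's `DecisionTreeRegressor` to a hypothetical noisy sine curve. With `max_depth=3` the tree has at most 8 leaves, so its prediction is a step function with at most 8 distinct values, yet it still follows the non-linear shape. The data and the depth are illustrative assumptions.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Hypothetical toy data: a noisy sine curve, i.e. a clearly non-linear target
rng = np.random.default_rng(0)
X = np.sort(rng.uniform(0, 6, size=200)).reshape(-1, 1)
y = np.sin(X).ravel() + rng.normal(0, 0.1, size=200)

# A tree of depth 3 has at most 2**3 = 8 leaves, hence at most 8 distinct outputs
tree = DecisionTreeRegressor(max_depth=3, random_state=0)
tree.fit(X, y)

# Predict on a dense grid: the output is a step function, constant within each region
X_grid = np.linspace(0, 6, 1000).reshape(-1, 1)
y_grid = tree.predict(X_grid)

print("Leaves:", tree.get_n_leaves())
print("Distinct predicted values:", np.unique(y_grid).size)
print("Training R^2:", tree.score(X, y))
```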

Here's how Decision Tree Regression works:

- Tree Construction
  - The algorithm starts with the entire dataset and recursively splits it into smaller subsets based on feature values. At each node it greedily selects the feature and split point that best separate the data according to a criterion. For regression this is typically the reduction in variance, i.e. minimizing the mean squared error of the target within the resulting child nodes.
  - The process continues until a stopping criterion is met. Examples include reaching a maximum depth, having a minimum number of samples in each leaf node, or no further improvement in the splitting criterion.
- Prediction
  - Once the tree is constructed, a prediction is made by traversing the tree from the root node to a leaf node based on the feature values of the input data point.
  - At each internal node, the algorithm compares one feature value of the data point with a threshold. It follows one branch if the value is less than or equal to the threshold and the other branch if it is greater.
  - On reaching a leaf node, the prediction is the constant value stored in that leaf. Under the squared-error criterion, this is the mean target value of the training samples in that leaf.
- Model Interpretation
  - Decision trees are interpretable models. Users can visualize the tree structure to see which features and thresholds drive each prediction, as long as the tree stays reasonably shallow.
  - Decision trees can capture non-linear relationships between features and targets. They can also model interactions between features, because each split is conditioned on the splits above it.
- Hyperparameter Tuning
  - Decision tree regression has hyperparameters that control the tree's complexity and help prevent overfitting. Examples are the maximum depth of the tree, the minimum number of samples required to split an internal node, and the minimum number of samples required at a leaf node.
  - Tuning these hyperparameters, typically with cross-validation, is crucial for balancing underfitting (a tree that is too shallow) against overfitting (a tree that is too deep).
- Handling Missing Values
  - Support for missing values depends on the implementation. Classic CART handles them with surrogate splits on other features, and recent versions of scikit-learn can route missing values to one side of a split during training. Other implementations require missing values to be imputed before training.
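The construction and prediction steps above can be sketched from scratch in NumPy. This is a simplified, CART-style illustration under stated assumptions, not scikit-learn's actual implementation. It uses an exhaustive threshold search, the squared-error criterion, and dict-based nodes. The helpers `best_split`, `build`, `predict_one`, and `predict` are hypothetical names.

```python
import numpy as np


def best_split(X, y, min_samples_leaf=1):
    """Return (feature, threshold, sse) for the split with the lowest total
    squared error of the two children, or None if no valid split exists."""
    n_samples, n_features = X.shape
    best = None
    for j in range(n_features):
        order = np.argsort(X[:, j])
        xs, ys = X[order, j], y[order]
        # Prefix sums give each candidate split's child SSE in O(1)
        csum, csum_sq = np.cumsum(ys), np.cumsum(ys ** 2)
        for i in range(min_samples_leaf, n_samples - min_samples_leaf + 1):
            if xs[i - 1] == xs[i]:
                continue  # equal feature values cannot be separated
            n_left, n_right = i, n_samples - i
            sum_l, sq_l = csum[i - 1], csum_sq[i - 1]
            sum_r, sq_r = csum[-1] - sum_l, csum_sq[-1] - sq_l
            # SSE of a child = sum(y^2) - (sum y)^2 / n
            sse = (sq_l - sum_l ** 2 / n_left) + (sq_r - sum_r ** 2 / n_right)
            if best is None or sse < best[2]:
                best = (j, (xs[i - 1] + xs[i]) / 2, sse)  # midpoint threshold
    return best


def build(X, y, depth=0, max_depth=3, min_samples_leaf=1):
    """Recursively grow a regression tree; leaves store the mean target."""
    leaf = {"value": float(np.mean(y))}
    # Stopping criteria: depth limit, too few samples, or a pure node
    if depth >= max_depth or len(y) < 2 * min_samples_leaf or np.all(y == y[0]):
        return leaf
    split = best_split(X, y, min_samples_leaf)
    if split is None:
        return leaf
    j, threshold, sse = split
    # Stop if the best split does not reduce the squared error
    if sse >= np.sum((y - y.mean()) ** 2):
        return leaf
    mask = X[:, j] <= threshold
    return {
        "feature": j,
        "threshold": threshold,
        "left": build(X[mask], y[mask], depth + 1, max_depth, min_samples_leaf),
        "right": build(X[~mask], y[~mask], depth + 1, max_depth, min_samples_leaf),
    }


def predict_one(node, x):
    # Walk from the root to a leaf: go left when x[feature] <= threshold
    while "value" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["value"]


def predict(tree, X):
    return np.array([predict_one(tree, x) for x in X])


# Hypothetical toy data: a step function with one clean break
X = np.array([[1.0], [2.0], [3.0], [10.0], [11.0], [12.0]])
y = np.array([5.0, 5.0, 5.0, 20.0, 20.0, 20.0])
tree = build(X, y, max_depth=2)
print(tree["threshold"])  # expected: 6.5 (midway between 3 and 10)
print(predict(tree, np.array([[0.0], [6.0], [7.0], [15.0]])))  # expected values: 5, 5, 20, 20
```

Real libraries add many refinements on top of this basic scheme, such as sample weights, other criteria, and faster split search.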

Decision Tree Regression is versatile and can be applied to various regression tasks. However, it's prone to overfitting, especially when the tree is allowed to grow too deep. Techniques like pruning, limiting the tree depth, and using ensemble methods like Random Forests can help mitigate overfitting and improve model generalization.
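The snippet below is a rough way to see this overfitting. It assumes the same synthetic house-price data generated in the example that follows. It fits a fully grown tree, two constrained trees, and a Random Forest, and reports R-squared on training and test data. The exact numbers depend on the random seed. The main thing to compare is the unconstrained tree's training score against its test score.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Synthetic house-price data (same recipe as the walkthrough)
np.random.seed(0)
size_sqft = np.random.randint(800, 3000, size=1000)
price = 50000 + 100 * size_sqft + np.random.normal(0, 10000, size=1000)
X = size_sqft.reshape(-1, 1)
X_train, X_test, y_train, y_test = train_test_split(X, price, test_size=0.2, random_state=42)

models = {
    "unlimited depth": DecisionTreeRegressor(random_state=42),
    "max_depth=5": DecisionTreeRegressor(max_depth=5, random_state=42),
    "min_samples_leaf=20": DecisionTreeRegressor(min_samples_leaf=20, random_state=42),
    "random forest": RandomForestRegressor(n_estimators=100, random_state=42),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # R^2 on data the model has seen vs. data it has not seen
    scores[name] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"{name:>20}: train R^2 = {scores[name][0]:.3f}, test R^2 = {scores[name][1]:.3f}")
```

The hyperparameter values here are illustrative, not tuned. scikit-learn also offers cost-complexity pruning through the `ccp_alpha` parameter.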

Let's walk through an example of Decision Tree Regression using a synthetic dataset to predict house prices based on their size (in square feet). We'll use the DecisionTreeRegressor implementation from the scikit-learn library in Python.

Here's how to do it step by step:

- Generate Synthetic Data

```python
import numpy as np
import pandas as pd

# Generate synthetic data
np.random.seed(0)
n_samples = 1000
size_sqft = np.random.randint(800, 3000, size=n_samples)  # Random square footage (800 to 2999 sqft; the upper bound is exclusive)
price = 50000 + 100 * size_sqft + np.random.normal(0, 10000, size=n_samples)  # Linear price plus Gaussian noise (std 10,000)

# Create DataFrame
data = pd.DataFrame({'Size_sqft': size_sqft, 'Price': price})
```

- Explore the Data

```python
print(data.head())      # First five rows
print(data.describe())  # Summary statistics for each column
```

- Data Preprocessing

```python
X = data[['Size_sqft']]  # Features (a one-column DataFrame)
y = data['Price']        # Target variable
```

- Split Data into Train and Test Sets

```python
from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```

- Create and Fit the Decision Tree Regression Model

```python
from sklearn.tree import DecisionTreeRegressor

# max_depth=5 caps the tree at 2**5 = 32 leaves to limit overfitting
model = DecisionTreeRegressor(max_depth=5, random_state=42)
model.fit(X_train, y_train)
```
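The fitted model can be inspected to back up the interpretability point made earlier. The sketch below uses scikit-learn's `get_depth`, `get_n_leaves`, and `export_text`. It rebuilds the data and model from the steps above so it runs standalone, and prints only the top two levels of rules for brevity.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor, export_text

# Rebuild the walkthrough's data and model so this snippet runs on its own
np.random.seed(0)
size_sqft = np.random.randint(800, 3000, size=1000)
price = 50000 + 100 * size_sqft + np.random.normal(0, 10000, size=1000)
data = pd.DataFrame({'Size_sqft': size_sqft, 'Price': price})
X_train, X_test, y_train, y_test = train_test_split(
    data[['Size_sqft']], data['Price'], test_size=0.2, random_state=42)
model = DecisionTreeRegressor(max_depth=5, random_state=42).fit(X_train, y_train)

print("Depth:", model.get_depth())
print("Leaves:", model.get_n_leaves())
# Human-readable if/else rules; max_depth here only truncates the printout
print(export_text(model, feature_names=['Size_sqft'], max_depth=2))
```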

- Make Predictions

```python
y_pred = model.predict(X_test)
```
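Because every prediction is a leaf value, the tree can output at most as many distinct prices as it has leaves. The sketch below checks this. It also recomputes each test prediction by hand as the mean training price of the leaf the sample lands in, using `model.apply`. It rebuilds the same data and model so it runs on its own.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Rebuild the walkthrough's data and model so this snippet runs on its own
np.random.seed(0)
size_sqft = np.random.randint(800, 3000, size=1000)
price = 50000 + 100 * size_sqft + np.random.normal(0, 10000, size=1000)
data = pd.DataFrame({'Size_sqft': size_sqft, 'Price': price})
X_train, X_test, y_train, y_test = train_test_split(
    data[['Size_sqft']], data['Price'], test_size=0.2, random_state=42)
model = DecisionTreeRegressor(max_depth=5, random_state=42).fit(X_train, y_train)
y_pred = model.predict(X_test)

# apply() returns the index of the leaf node each sample ends up in
train_leaves = model.apply(X_train)
test_leaves = model.apply(X_test)

# Recompute each leaf's value by hand: the mean training price in that leaf
leaf_means = pd.Series(y_train.to_numpy()).groupby(train_leaves).mean()
manual_pred = leaf_means.loc[test_leaves].to_numpy()

print("Distinct predicted values:", np.unique(y_pred).size)
print("Leaves in the tree:", model.get_n_leaves())
print("Leaf means match model.predict:", np.allclose(manual_pred, y_pred))
```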

- Evaluate the Model

```python
from sklearn.metrics import mean_squared_error, r2_score

mse = mean_squared_error(y_test, y_pred)
r2 = r2_score(y_test, y_pred)

print("Mean Squared Error:", mse)
print("R-squared:", r2)
```
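The `max_depth=5` used above is a hand-picked value. One way to choose hyperparameters more systematically is a cross-validated grid search on the training set, sketched below with scikit-learn's `GridSearchCV`. The grid values are illustrative assumptions, not recommended settings.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import r2_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeRegressor

# Rebuild the walkthrough's data split so this snippet runs on its own
np.random.seed(0)
size_sqft = np.random.randint(800, 3000, size=1000)
price = 50000 + 100 * size_sqft + np.random.normal(0, 10000, size=1000)
data = pd.DataFrame({'Size_sqft': size_sqft, 'Price': price})
X_train, X_test, y_train, y_test = train_test_split(
    data[['Size_sqft']], data['Price'], test_size=0.2, random_state=42)

# Illustrative candidate values (None means "grow until leaves are pure")
param_grid = {
    "max_depth": [2, 3, 4, 5, 6, 8, None],
    "min_samples_leaf": [1, 5, 10, 20, 50],
}
search = GridSearchCV(
    DecisionTreeRegressor(random_state=42),
    param_grid,
    cv=5,
    scoring="neg_mean_squared_error",  # GridSearchCV maximises, so MSE is negated
)
search.fit(X_train, y_train)  # tuning sees only the training set

print("Best parameters:", search.best_params_)
print("Cross-validated MSE:", -search.best_score_)
print("Test R-squared:", r2_score(y_test, search.best_estimator_.predict(X_test)))
```

The test set is used only once, after tuning, so its score remains an honest estimate of generalization.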

- Visualize Results

```python
import matplotlib.pyplot as plt

plt.scatter(X_test, y_test, color='blue', label='Actual')  # Plot test data
plt.scatter(X_test, y_pred, color='red', marker='x', label='Predictions')  # Plot predicted values
plt.xlabel("Size (sqft)")
plt.ylabel("Price")
plt.title("Decision Tree Regression: House Size vs. Price")
plt.legend()
plt.show()
```
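Plotting predictions only at the test points hides the shape of the model. The variant sketched below evaluates the tree on a sorted grid of sizes and draws the result as a step function over the test data, which makes the piecewise-constant fit visible. It rebuilds the same data and model so it runs standalone.

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Rebuild the walkthrough's data and model so this snippet runs on its own
np.random.seed(0)
size_sqft = np.random.randint(800, 3000, size=1000)
price = 50000 + 100 * size_sqft + np.random.normal(0, 10000, size=1000)
data = pd.DataFrame({'Size_sqft': size_sqft, 'Price': price})
X_train, X_test, y_train, y_test = train_test_split(
    data[['Size_sqft']], data['Price'], test_size=0.2, random_state=42)
model = DecisionTreeRegressor(max_depth=5, random_state=42).fit(X_train, y_train)

# Evaluate the tree on every integer size in the data range, in sorted order
X_grid = pd.DataFrame({'Size_sqft': np.arange(800, 3000)})
y_grid = model.predict(X_grid)

plt.scatter(X_test['Size_sqft'], y_test, color='blue', alpha=0.4, label='Actual (test)')
plt.step(X_grid['Size_sqft'], y_grid, where='post', color='red', label='Tree prediction')
plt.xlabel("Size (sqft)")
plt.ylabel("Price")
plt.title("Decision Tree Regression: Piecewise-Constant Fit")
plt.legend()
plt.show()
```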