Random Forest Classification

Introduction

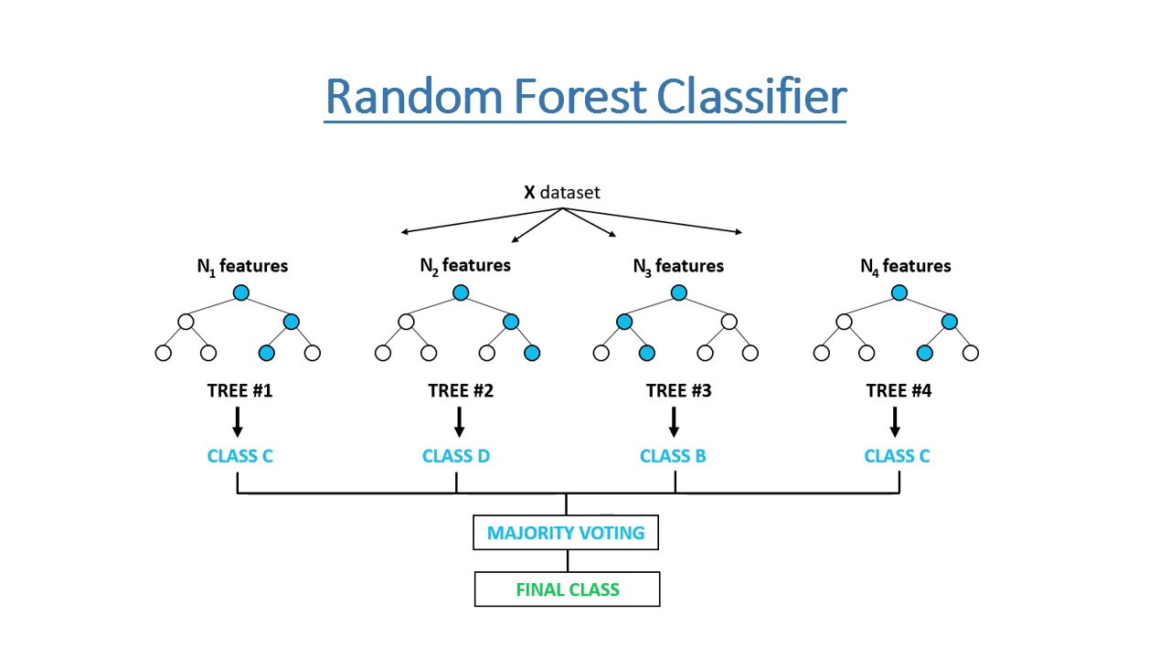

The Random Forest Classifier is an ensemble learning method that combines the predictions of many decision trees. It trains multiple decision trees on different random views of the training data and outputs the class that is the mode of the individual trees' predicted classes (for classification) or the mean of their predictions (for regression).

Here's how the Random Forest Classifier works:

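- Bootstrap Sampling (Bagging)

Random Forest starts by drawing samples with replacement (bootstrap sampling) from the original dataset, typically as many samples as the dataset contains, to create a separate training set for each tree. Some rows appear more than once in a given bootstrap sample and others not at all.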
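A minimal NumPy sketch of drawing one bootstrap sample, assuming a dataset of n_samples rows addressed by index (the variable names are illustrative, not from any library). Sampling n_samples indices with replacement leaves, on average, about 36.8% (roughly 1/e) of the rows out of each sample; those rows are the tree's out-of-bag samples.

```python
import numpy as np

rng = np.random.default_rng(seed=0)  # fixed seed for reproducibility
n_samples = 150  # e.g. the number of rows in the Iris dataset

# Draw n_samples row indices WITH replacement: some rows repeat, some are missing.
bootstrap_idx = rng.choice(n_samples, size=n_samples, replace=True)

# Rows that were never drawn are "out-of-bag" (OOB) for this tree.
oob_mask = np.ones(n_samples, dtype=bool)
oob_mask[bootstrap_idx] = False
oob_idx = np.flatnonzero(oob_mask)

unique_frac = np.unique(bootstrap_idx).size / n_samples
print(f"unique rows in bootstrap sample: {unique_frac:.1%}")
print(f"out-of-bag rows: {oob_idx.size}")
```

Repeating this draw once per tree gives every tree its own, slightly different training set.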

- Random Feature Selection

Whenever a tree splits a node, it considers only a random subset of the features, drawn afresh at every node. This introduces diversity among the trees and helps prevent overfitting.
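The per-split feature sampling can be sketched as follows. The sketch assumes the common classification default of considering about √p of the p features at each split (the rule scikit-learn applies for max_features="sqrt"); the helper name sample_split_features is hypothetical.

```python
import math
import numpy as np

def sample_split_features(n_features, rng, max_features="sqrt"):
    """Hypothetical helper: choose the candidate features for ONE split."""
    if max_features == "sqrt":
        k = max(1, int(math.sqrt(n_features)))
    else:
        k = int(max_features)
    # Without replacement: each candidate feature appears at most once per split.
    return rng.choice(n_features, size=k, replace=False)

rng = np.random.default_rng(seed=0)
# Each node draws its own subset, so different splits (in the same tree
# or in different trees) usually consider different features.
for node in range(3):
    print(f"node {node}: candidate features {sample_split_features(16, rng)}")
```

The split search then evaluates only the returned features, which is what decorrelates the trees.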

- Decision Tree Building

Each decision tree is trained on its own bootstrap sample, choosing each split from that node's random subset of features. Trees are typically grown to full depth without pruning.
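The first three steps (bootstrap sampling, random feature selection, and tree building) can be combined in a short sketch that uses scikit-learn's DecisionTreeClassifier as the base learner: max_features="sqrt" supplies the per-split feature sampling, and max_depth=None grows each tree without a depth limit. This is an illustration of the idea, not how RandomForestClassifier is implemented internally; the helper name build_trees is hypothetical.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

def build_trees(X, y, n_trees=10, seed=0):
    """Hypothetical helper: grow n_trees unpruned trees on bootstrap samples."""
    rng = np.random.default_rng(seed)
    trees = []
    for _ in range(n_trees):
        idx = rng.choice(len(X), size=len(X), replace=True)  # bootstrap sample
        tree = DecisionTreeClassifier(
            max_features="sqrt",  # random feature subset at every split
            max_depth=None,       # no depth limit, no pruning
            random_state=int(rng.integers(1_000_000)),
        )
        tree.fit(X[idx], y[idx])
        trees.append(tree)
    return trees

X, y = load_iris(return_X_y=True)
trees = build_trees(X, y)
print([t.get_depth() for t in trees])  # depths may differ: each tree saw different data
```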

- Voting or Averaging

- For classification tasks, the predicted class of each tree is recorded. The final prediction is determined by taking the majority vote (mode) of all the individual tree predictions.

- For regression tasks, the predicted values of each tree are averaged to obtain the final prediction.
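Both aggregation rules can be sketched with NumPy on made-up per-tree predictions (the arrays below are illustrative, not output from a real model). Note that scikit-learn's RandomForestClassifier does not use hard voting: it averages the trees' predicted class probabilities and returns the class with the highest mean probability, which usually, but not always, matches the majority vote.

```python
import numpy as np

# Hypothetical class predictions: rows = trees, columns = test samples.
tree_class_preds = np.array([
    [0, 1, 2, 1],
    [0, 2, 2, 1],
    [0, 1, 1, 2],
])
# Classification: majority vote (mode) per column.
# np.bincount counts each label; argmax picks the most frequent (ties -> smallest label).
majority = np.array([np.bincount(col).argmax() for col in tree_class_preds.T])
print(majority)  # [0 1 2 1]

# Regression: average the per-tree numeric predictions for each sample.
tree_reg_preds = np.array([
    [2.0, 3.5],
    [2.4, 3.1],
    [1.9, 3.3],
])
print(tree_reg_preds.mean(axis=0))
```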

- Ensemble Learning

By combining multiple decision trees, Random Forest reduces overfitting and improves generalization performance. Averaging many trees also makes predictions more robust, because the ensemble's prediction has lower variance than that of any individual tree.
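The variance-reduction claim can be made concrete with a standard identity. Assume each of the $B$ trees' predictions $T_b(x)$ at an input $x$ has variance $\sigma^2$ and every pair of trees has correlation $\rho$ (an idealization; $T_b$ is notation introduced only for this formula). Then the variance of their average is:

$$
\operatorname{Var}\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
= \frac{1}{B^2}\left(B\sigma^2 + B(B-1)\rho\sigma^2\right)
= \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

Adding trees shrinks the second term toward zero, but the first term remains. Bootstrap sampling and random feature selection both aim to lower $\rho$, which is what makes averaging effective.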

- Hyperparameters

Random Forest has several hyperparameters that can be tuned to optimize its performance, such as the number of trees (n_estimators), the maximum depth of each tree (max_depth), the minimum number of samples required to split a node (min_samples_split), and the number of features considered at each split (max_features).
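One common way to tune these hyperparameters is a cross-validated grid search. The sketch below uses scikit-learn's GridSearchCV on the Iris data; the grid values are arbitrary illustrations, not recommended settings.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

X, y = load_iris(return_X_y=True)

# Illustrative grid; a real search depends on dataset size and compute budget.
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [None, 3],
    "min_samples_split": [2, 5],
}

search = GridSearchCV(
    RandomForestClassifier(random_state=42),
    param_grid=param_grid,
    cv=5,                # 5-fold cross-validation for every combination
    scoring="accuracy",
)
search.fit(X, y)
print("Best parameters:", search.best_params_)
print("Best mean CV accuracy:", round(search.best_score_, 3))
```

A grid grows multiplicatively with each added parameter (here 2 × 2 × 2 = 8 combinations, each fit 5 times), so RandomizedSearchCV is often preferred for larger searches.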

- Out-of-Bag (OOB) Error Estimation

Random Forest can estimate its performance during training using out-of-bag samples, which are the samples not included in the bootstrap sample used to train a given tree. Each sample is out-of-bag for roughly a third of the trees, so aggregating only those trees' predictions for it yields a performance estimate without the need for a separate validation set.
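In scikit-learn the OOB estimate is off by default; it is enabled with oob_score=True, which requires bootstrap=True (the default). A minimal sketch on the Iris data:

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)

model = RandomForestClassifier(
    n_estimators=100,
    bootstrap=True,   # default: each tree is trained on a bootstrap sample
    oob_score=True,   # score each sample using only trees that did not train on it
    random_state=42,
)
model.fit(X, y)  # all data used for training; no held-out split needed for the OOB estimate
print("OOB accuracy estimate:", round(model.oob_score_, 3))
```

With very few trees, some samples may never be out-of-bag, and scikit-learn warns that the OOB estimate may be unreliable.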

The Random Forest Classifier is widely used for classification tasks in various domains due to its robustness, scalability, and ability to handle high-dimensional data. It is less prone to overfitting than individual decision trees and often achieves high accuracy with minimal hyperparameter tuning.

Let's consider an example of using the Random Forest Classifier for a multiclass (three-class) classification task using the famous Iris dataset:

- We import the necessary modules from scikit-learn.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
```

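- We load the Iris dataset using the load_iris function from scikit-learn.

```python
iris = load_iris()
X = iris.data    # feature matrix, shape (150, 4)
y = iris.target  # class labels 0, 1, 2 (setosa, versicolor, virginica)
```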

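- We split the data into training and testing sets using the train_test_split function.

```python
# Hold out 20% of the samples for testing; random_state makes the split reproducible.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```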

- We create an instance of the RandomForestClassifier class with 100 trees (n_estimators=100) and set the random state for reproducibility.

```python
model = RandomForestClassifier(n_estimators=100, random_state=42)
```

- We fit the classifier to the training data using the fit method.

```python
model.fit(X_train, y_train)
```

- We make predictions on the test data using the predict method.

```python
y_pred = model.predict(X_test)
```

- We evaluate the model's performance using metrics such as accuracy, confusion matrix, and classification report.

```python
accuracy = accuracy_score(y_test, y_pred)
conf_matrix = confusion_matrix(y_test, y_pred)
class_report = classification_report(y_test, y_pred)

print("Accuracy:", accuracy)
print("Confusion Matrix:\n", conf_matrix)
print("Classification Report:\n", class_report)
```

This example demonstrates how to use the Random Forest Classifier for a multiclass classification task on the Iris dataset. The model learns to classify iris flowers into three species (setosa, versicolor, virginica) based on four features: sepal length, sepal width, petal length, and petal width. Because its prediction aggregates many decorrelated trees, the forest is generally less prone to overfitting than a single decision tree.
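To check the overfitting claim on this dataset rather than take it on faith, one can compare a single unpruned decision tree with the forest using 5-fold cross-validation. This is a sketch; on a small, easily separated dataset like Iris the gap may be small or absent, so no particular result is implied.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

models = {
    "single tree": DecisionTreeClassifier(random_state=42),
    "random forest": RandomForestClassifier(n_estimators=100, random_state=42),
}
for name, clf in models.items():
    scores = cross_val_score(clf, X, y, cv=5, scoring="accuracy")
    # Mean accuracy across folds, plus the spread between folds.
    print(f"{name}: mean={scores.mean():.3f}, std={scores.std():.3f}")
```

On larger, noisier datasets the difference between the two models is typically easier to see.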