Random Forest Regression

Introduction

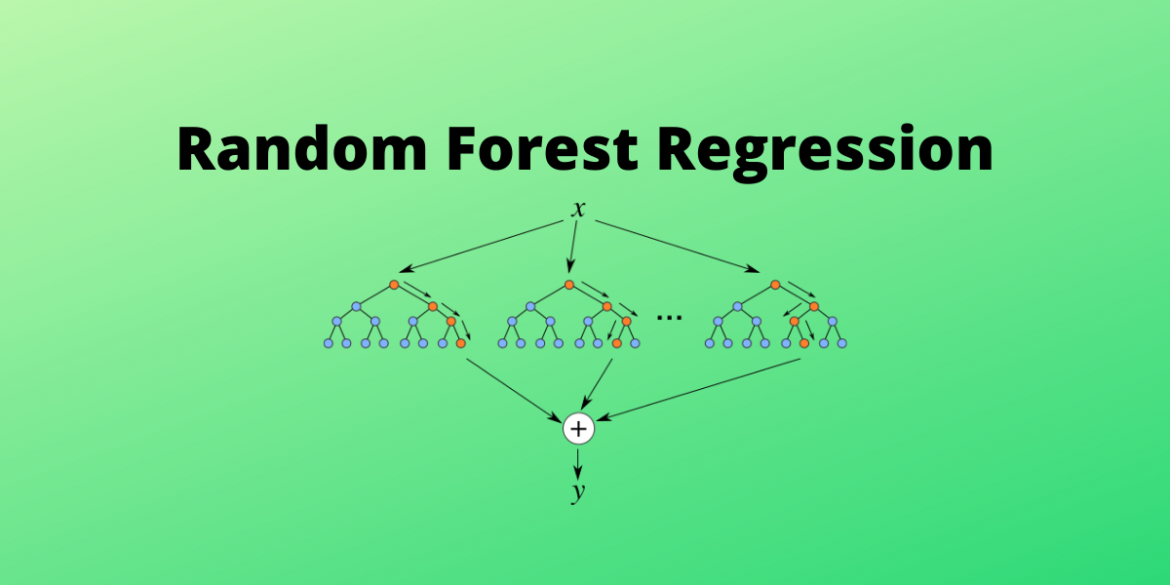

Random Forest Regression is an ensemble learning method for regression tasks. It extends the decision tree algorithm by constructing many decision trees during training and outputting the average of their individual predictions.

Here's how Random Forest Regression works:

- Bootstrapping

For each tree, Random Forest draws a random sample of the training data with replacement, known as a bootstrap sample. Each decision tree in the forest is trained on its own bootstrap sample, so some observations appear several times in a given sample while others are left out of it.
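To make the sampling step concrete, here is a minimal NumPy sketch of drawing one bootstrap sample. The toy data, the seed, and the variable names are assumptions made for illustration; a real forest would repeat the draw once per tree.

```python
import numpy as np

rng = np.random.default_rng(42)  # seed chosen only for reproducibility
n_samples = 10
X = np.arange(n_samples).reshape(-1, 1)  # toy feature matrix (illustrative)
y = 2.0 * X.ravel()                      # toy targets (illustrative)

# Draw n_samples row indices WITH replacement: one bootstrap sample.
boot_idx = rng.integers(0, n_samples, size=n_samples)
X_boot, y_boot = X[boot_idx], y[boot_idx]

# Rows that were never drawn are "out-of-bag" for this particular tree.
oob_idx = np.setdiff1d(np.arange(n_samples), boot_idx)

print("bootstrap indices:", boot_idx)
print("out-of-bag indices:", oob_idx)
```

Because the draw is with replacement, the bootstrap sample has the same size as the original data but usually contains repeated rows.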

- Random Feature Selection

During the construction of each decision tree in the forest, a random subset of features is selected at each node to determine the best split. This process introduces randomness and helps to decorrelate the trees.
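The per-node feature sampling can be sketched in a few lines. The helper `candidate_features` and the values of `n_features` and `max_features` below are hypothetical, chosen only to illustrate the idea; in scikit-learn the number of candidates is controlled by the `max_features` parameter.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features = 8    # total number of features (illustrative)
max_features = 3  # hypothetical setting: candidates examined per split


def candidate_features(rng, n_features, max_features):
    """Return the feature indices considered at one node (no repeats)."""
    return rng.choice(n_features, size=max_features, replace=False)


# Each node draws its own candidate set, so different nodes (and trees)
# can end up splitting on different features.
for node in range(3):
    print(f"node {node}: candidates = {candidate_features(rng, n_features, max_features)}")
```

The best split is then searched only among the drawn candidates rather than among all features.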

- Decision Tree Construction

Each decision tree in the Random Forest is constructed from its own bootstrap sample of the training data, considering a random subset of features at each split. The trees are typically grown to their maximum depth without pruning.
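As a rough illustration, the construction step could be hand-rolled with scikit-learn's `DecisionTreeRegressor`. This is a sketch under stated assumptions (25 trees, 2 candidate features per split, synthetic data), not how `RandomForestRegressor` is implemented internally.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

# Synthetic data, used here only for illustration.
X, y = make_regression(n_samples=300, n_features=5, noise=10, random_state=0)
rng = np.random.default_rng(0)

trees = []
for i in range(25):  # number of trees is an arbitrary choice
    # Bootstrap sample for this tree: row indices drawn with replacement.
    idx = rng.integers(0, len(X), size=len(X))
    tree = DecisionTreeRegressor(
        max_depth=None,   # grow fully, no pruning
        max_features=2,   # 2 randomly chosen features considered at each split
        random_state=i,
    )
    tree.fit(X[idx], y[idx])
    trees.append(tree)

print("trees built:", len(trees))
print("depth of first tree:", trees[0].get_depth())
```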

- Aggregation of Predictions

For regression tasks, the predictions of all trees in the forest are averaged to obtain the final prediction. Averaging reduces the variance of the individual trees, which helps to limit overfitting and improve the generalization performance of the model.
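The averaging step can be checked directly against a fitted scikit-learn forest, whose individual trees are available through the `estimators_` attribute. The dataset and the number of trees below are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

X, y = make_regression(n_samples=200, n_features=3, noise=10, random_state=0)
forest = RandomForestRegressor(n_estimators=20, random_state=0).fit(X, y)

# Predictions of every individual tree, shape (n_trees, n_samples).
per_tree = np.stack([tree.predict(X) for tree in forest.estimators_])

# Averaging across trees should reproduce the forest's own prediction.
manual_average = per_tree.mean(axis=0)
print("matches forest.predict:", np.allclose(manual_average, forest.predict(X)))
```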

- Hyperparameter Tuning

Random Forest has hyperparameters that control the number of trees in the forest, the maximum depth of the trees, and the number of features to consider at each split. These hyperparameters can be tuned using techniques like grid search or random search to optimize model performance.
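A small grid search might look like the sketch below. The grid values, the 3-fold cross-validation, and the scoring choice are assumptions picked to keep the example fast, not recommended settings.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV

X, y = make_regression(n_samples=300, n_features=5, noise=20, random_state=42)

# Candidate values for the three hyperparameters discussed above.
param_grid = {
    "n_estimators": [25, 50],
    "max_depth": [3, None],      # None lets trees grow fully
    "max_features": [2, 1.0],    # 1.0 means all features are considered
}

search = GridSearchCV(
    RandomForestRegressor(random_state=42),
    param_grid,
    cv=3,                               # 3-fold cross-validation
    scoring="neg_mean_squared_error",   # higher (closer to 0) is better
)
search.fit(X, y)

print("best parameters:", search.best_params_)
print("best CV MSE:", -search.best_score_)
```

For larger search spaces, `RandomizedSearchCV` from the same module samples a fixed number of parameter combinations instead of trying them all.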

- Prediction

Once the Random Forest model is trained, it can be used to make predictions on new data points by aggregating the predictions of all trees in the forest.

Random Forest Regression is robust, versatile, and less prone to overfitting compared to individual decision trees. It can capture complex relationships in the data and handle high-dimensional feature spaces effectively.
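One way to probe the overfitting claim is to fit a single unpruned tree and a forest on the same split and compare training and test scores. The sketch below assumes a synthetic dataset and default settings; the exact numbers will vary with the data.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

X, y = make_regression(n_samples=1000, n_features=1, noise=20, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# A single fully grown tree versus an ensemble of 100 trees.
tree = DecisionTreeRegressor(random_state=42).fit(X_train, y_train)
forest = RandomForestRegressor(n_estimators=100, random_state=42).fit(X_train, y_train)

# score() returns R-squared for regressors.
for name, est in [("single tree", tree), ("random forest", forest)]:
    train_r2 = est.score(X_train, y_train)
    test_r2 = est.score(X_test, y_test)
    print(f"{name}: train R^2 = {train_r2:.3f}, test R^2 = {test_r2:.3f}")
```

A large gap between training and test scores is the usual sign of overfitting, so the quantity to compare is that gap for each model.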

Now, let's see an example of Random Forest Regression in Python using the RandomForestRegressor class from the scikit-learn library:

- We import NumPy, pandas, Matplotlib, and the necessary modules from scikit-learn.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.datasets import make_regression

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_squared_error, r2_score

- We generate synthetic data using the make_regression function from scikit-learn.

X, y = make_regression(n_samples=1000, n_features=1, noise=20, random_state=42)

- We split the data into training and testing sets using the train_test_split function.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

- We create an instance of the RandomForestRegressor class and fit it to the training data.

model = RandomForestRegressor(n_estimators=100, max_depth=5, random_state=42)

model.fit(X_train, y_train)

- We make predictions on the test data using the predict method.

y_pred = model.predict(X_test)

- We evaluate the model's performance using mean squared error (MSE) and R-squared.

mse = mean_squared_error(y_test, y_pred)

r2 = r2_score(y_test, y_pred)

print("Mean Squared Error:", mse)

print("R-squared:", r2)

- Finally, we visualize the actual versus predicted values using a scatter plot.

plt.figure(figsize=(10, 6))

plt.scatter(X_test, y_test, color='blue', label='Actual')

plt.scatter(X_test, y_pred, color='red', label='Predicted')

plt.xlabel('X')

plt.ylabel('y')

plt.title('Random Forest Regression')

plt.legend()

plt.show()

This example demonstrates how to use Random Forest Regression for a simple regression task. You can adjust hyperparameters like n_estimators (number of trees), max_depth (maximum depth of trees), and others to control the model's complexity and optimize its performance.
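To see how one of these hyperparameters changes model complexity, a simple sweep over max_depth can be run on the same kind of synthetic data. The depth values below are arbitrary choices for illustration.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=1000, n_features=1, noise=20, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

results = {}
for depth in [1, 3, 5, None]:  # None lets the trees grow fully
    model = RandomForestRegressor(n_estimators=100, max_depth=depth, random_state=42)
    model.fit(X_train, y_train)
    # Store (train R^2, test R^2) for each depth setting.
    results[depth] = (model.score(X_train, y_train), model.score(X_test, y_test))
    print(f"max_depth={depth}: train R^2 = {results[depth][0]:.3f}, "
          f"test R^2 = {results[depth][1]:.3f}")
```

Comparing the training and test columns shows where extra depth stops improving the test score and only improves the fit to the training data.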